Whitepaper | July 2025


High Density Fiber Connectivity for Hyperscale Data Centers

Nguyen X. Nguyen (1), Rick Nguyen (1), Hieu M. Bui (1), Naoya Wada (2)

(1) VAFC Global Pte. Ltd., Singapore (a spin-off from SVI Group, Vietnam)

(2) Advanced ICT Research Institute, National Institute of Information and Communications Technology, Tokyo, Japan

1. Executive Summary

The explosion of data and AI workloads is straining the limits of conventional optical infrastructure in hyperscale data centers. Multi-Core Fiber (MCF) technology offers a compelling solution by packing multiple independent fiber cores into a single strand. This spatial multiplexing multiplies per-fiber bandwidth while reducing cabling bulk. Key advantages include higher transmission capacity (e.g. a 4-core MCF with the same standard 125 µm cladding can deliver nearly 4× the throughput of a standard SMF and ≈80% cable-tray space savings, as demonstrated in Fujikura’s 1152-channel field-cable trial [1]), as well as potential cost and power efficiency gains from consolidating links. MCF’s ability to integrate many channels in one fiber enables a simpler network architecture and scalability to “AI factory” requirements that demand massive, low-latency interconnects.

Recent developments by leading institutes and vendors (NICT, Sumitomo Electric, etc.) have brought MCF from the lab to field trials and initial commercialization [2, 3]. While technical challenges like core-to-core crosstalk and specialized connectivity remain, these are being actively mitigated through fiber design (trench-assisted cores, optimized core layouts) and new fan-in/fan-out devices. In short, MCF has matured into a viable next-generation fiber solution. Investors and data center operators should view MCF as a strategic opportunity: it delivers multifold per-fiber capacity gains and operational savings (footprint, cable management) critical for scaling cloud and AI data centers, all while leveraging optical technology that is increasingly ready for deployment [2, 4]. This whitepaper provides a comparative analysis of MCF versus SMF, multi-mode, and hollow-core fiber across key performance metrics, and outlines how MCF’s technical merits translate into business value for hyperscale and high-performance computing (HPC) data centers.

Figure 1. Google’s hyperscale data center campus in Midlothian, Texas. (Image source: Google Data Centers)

2. Introduction and Industry Drivers

The relentless growth of cloud computing, video streaming, and AI model training is driving exponential increases in data traffic within and between hyperscale data centers. According to TeleGeography, global international bandwidth usage grew at a compound annual rate of approximately 32% between 2020 and 2024 — a figure that reflects sustained pressure on backbone network infrastructure worldwide [5]. However, in hyperscale and AI-centric data centers, where massive GPU clusters and distributed storage systems must communicate with low latency and high throughput, actual network capacity demands are likely to surpass these global averages.

While port speeds have advanced rapidly to 400G, 800G, and beyond, the growth in east-west data traffic between servers and accelerators often outpaces these increases, necessitating the deployment of more transceivers and parallel fiber links to meet aggregate throughput requirements. Relying solely on single-core fibers (SCFs) for this scaling approach inevitably leads to physical and operational limits inside data centers, quickly exhausting duct and tray capacity and making further scaling increasingly unsustainable in dense environments.

Figure 2. Dense cabling in an internal network switch system at one of Google’s hyperscale data centers (South Carolina). (Image source: Google Data Centers)

To address these limitations, Space-Division Multiplexing (SDM) has emerged as a leading strategy to scale bandwidth without proportionally increasing fiber count [2]. Among SDM approaches, Multi-Core Fiber (MCF) is considered the most practical for near-term deployment. MCF integrates multiple single-mode cores into a standard 125 μm cladding [6], enabling significant increases in per-fiber throughput while maintaining compatibility with existing cabling infrastructure.

This report evaluates MCF in comparison to legacy fiber types, including single-core, multi-mode, and hollow-core fiber, across key performance and deployment metrics. We focus particularly on intra-data center use cases (short-reach links between racks, AI clusters, and silicon photonics modules), while also considering inter-building and metro-scale connections. Technical challenges such as inter-core crosstalk, fan-in/fan-out coupling, and fiber management are addressed through recent innovations in fiber design and packaging. The analysis draws on publicly available information from NICT; from EXAT, a Japanese community with NICT at its core that operates under IEICE and focuses on advanced fibers and components for SDM networks such as multi-core fiber; and from industry players such as Fujikura and Sumitomo Electric. On this basis, the paper outlines how MCF’s architectural advantages can translate into business value through higher bandwidth density, reduced infrastructure complexity, and scalable, future-ready network performance.

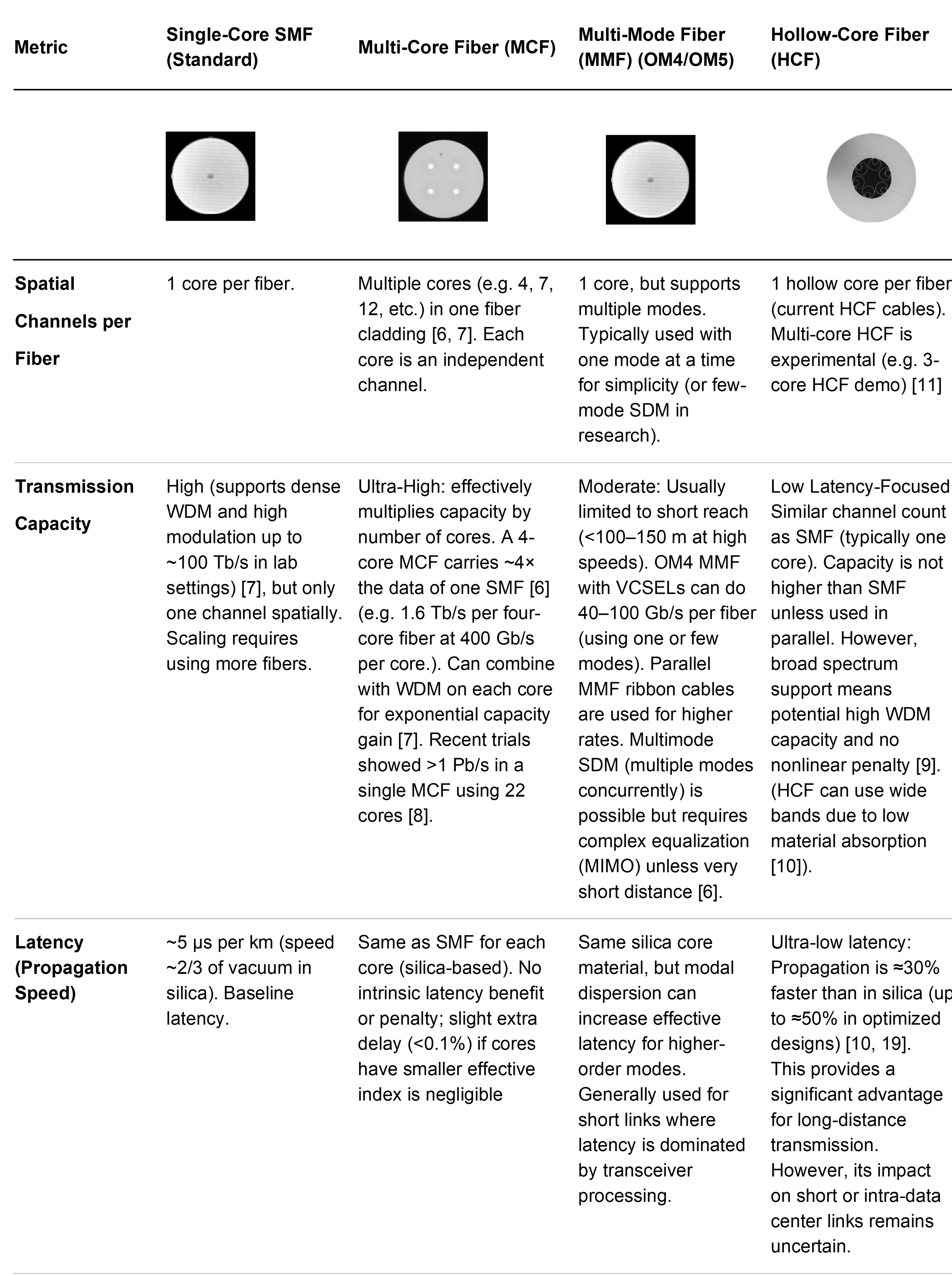

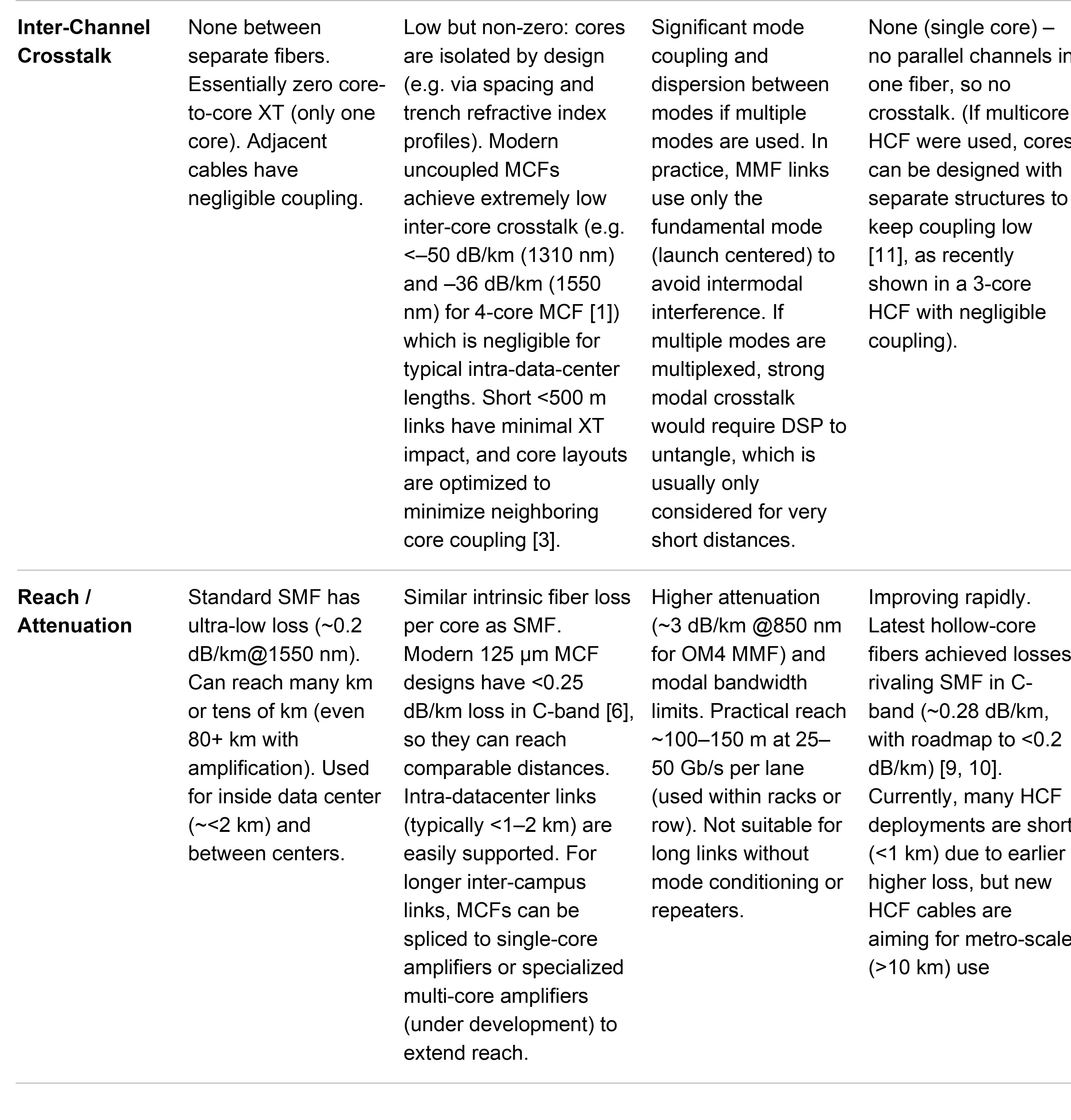

3. Comparing Fiber Technologies for Data Centers


| Metric | Single-Core SMF (Standard) | Multi-Core Fiber (MCF) | Multi-Mode Fiber (MMF) (OM4/OM5) | Hollow-Core Fiber (HCF) |
| --- | --- | --- | --- | --- |
| Spatial channels per fiber | 1 core per fiber. | Multiple cores (e.g. 4, 7, 12) in one fiber cladding [6, 7]. Each core is an independent channel. | 1 core that supports many modes. Conventional links carry a single channel spread across those modes; using modes as separate channels (few-mode SDM) remains a research topic. | 1 hollow core per fiber (current HCF cables). Multi-core HCF is experimental (e.g. a 3-core HCF demonstration) [11]. |
| Transmission capacity | High (supports dense WDM and advanced modulation, up to ~100 Tb/s in lab settings) [7], but only one spatial channel. Scaling requires more fibers. | Ultra-high: effectively multiplies capacity by the number of cores. A 4-core MCF carries ~4× the data of one SMF [6] (e.g. 1.6 Tb/s per four-core fiber at 400 Gb/s per core). WDM can be combined on each core for a multiplicative capacity gain [7]. Recent trials showed >1 Pb/s in a single MCF using 22 cores [8]. | Moderate, and usually limited to short reach (<100–150 m at high speeds). OM4 MMF with VCSELs carries roughly 40–100 Gb/s per fiber; parallel MMF ribbon cables are used for higher rates. Mode-division SDM (multiple modes as concurrent channels) is possible but requires complex MIMO equalization except over very short distances [6]. | Latency-focused: similar channel count to SMF (typically one core), so capacity is not higher than SMF unless fibers are used in parallel. However, broad spectral support and negligible nonlinearity mean high potential WDM capacity [9]. (HCF can use wide bands due to low material absorption [10].) |
| Latency (propagation speed) | ~5 μs per km (light travels at ~2/3 of its vacuum speed in silica). Baseline latency. | Same as SMF for each core (silica-based): no intrinsic latency benefit or penalty. Small core-to-core differences in group index change delay by <0.1%, which is negligible. | Same silica core material, but modal dispersion spreads arrival times across modes, adding effective latency for higher-order modes. Generally used for short links where latency is dominated by transceiver processing. | Ultra-low latency: light travels at close to its vacuum speed, roughly 45–50% faster than in silica, cutting propagation delay by ≈30% [10, 19]. This is a significant advantage for long-distance transmission; on short intra-data center links the absolute saving is small (under 1 μs per 500 m), and its practical impact there remains uncertain. |
| Inter-channel crosstalk | None: only one core per fiber, and adjacent fibers or cables have negligible coupling. | Low but non-zero: cores are isolated by design (e.g. via spacing and trench refractive-index profiles). Modern uncoupled MCFs achieve very low inter-core crosstalk (e.g. <–50 dB/km at 1310 nm and –36 dB/km at 1550 nm for a 4-core MCF [1]), which is negligible over typical intra-data center lengths. Short (<500 m) links see minimal XT impact, and core layouts are optimized to minimize coupling between neighboring cores [3]. | Strong mode coupling and intermodal dispersion. Conventional MMF links do not use modes as separate channels; the signal occupies many modes, and intermodal dispersion limits bandwidth and reach. If modes were multiplexed as independent channels, the strong modal crosstalk would require DSP (MIMO) to untangle, which is usually considered only for very short distances. | None (single core): no parallel channels in one fiber, so no crosstalk. (In multicore HCF, cores can be designed with separate structures to keep coupling low [11], as recently shown in a 3-core HCF with negligible coupling.) |
| Reach / attenuation | Ultra-low loss (~0.2 dB/km at 1550 nm). Can reach many km or tens of km (80+ km with amplification). Used both inside data centers (typically <2 km) and between them. | Similar intrinsic loss per core to SMF. Modern 125 μm MCF designs have <0.25 dB/km loss in the C-band [6], so they can reach comparable distances. Intra-data center links (typically <1–2 km) are easily supported. For longer inter-campus links, MCFs can be connected through fan-in/fan-out devices to single-core amplifiers, or to specialized multi-core amplifiers (under development), to extend reach. | Higher attenuation (~3 dB/km at 850 nm for OM4) and modal-bandwidth limits. Practical reach ~100–150 m at 25–50 Gb/s per lane (within racks or rows). Not suitable for long links without mode conditioning or repeaters. | Improving rapidly. Recent hollow-core fibers achieve losses rivaling SMF in the C-band (~0.28 dB/km, with a roadmap to <0.2 dB/km) [9, 10]. Many current HCF deployments are short (<1 km) because of earlier higher losses, but new HCF cables target metro-scale (>10 km) use. |
| Bend resilience | Bend-insensitive SMF per ITU-T G.657.A1 is specified for bend radii down to ~10 mm with low loss. Well-understood handling. | Good with proper design: 125 μm-cladding MCFs can meet G.657.A1 bend requirements. Tight bends slightly increase coupling between cores, but trench-assisted cores and robust coatings mitigate this. MCFs with larger cladding (>200 μm) are less flexible and mainly suited to long-haul routes where bends are gentle. For data centers, standard-diameter MCF is preferred for its bend resilience [6]. | MMF (50 μm core, 125 μm cladding) is quite bend-tolerant (OM4 is often available in bend-insensitive versions). Typically used in patch cables without issue at tight bends, though very sharp bends can distort the mode distribution. | Early HCF could be bend-sensitive (microbending can induce loss). Newer designs (nested anti-resonant structures) improve bend tolerance, but HCF usually still requires a slightly larger bend radius to maintain low loss. Protective cable structures help. Handling is approaching SMF norms, but careful installation is needed to avoid kinking or crushing the air core. |
| Transceiver technology | Single-mode lasers (often 1310 nm or 1550 nm) and receivers. Mature ecosystem of Ethernet and InfiniBand optics (PAM4, coherent, etc.). Each fiber pair needs its own transceiver port. | Similar lasers/optics per core, but enables consolidation: e.g. a single transceiver module or on-board photonic package can drive multiple cores simultaneously [6], yielding higher-density I/O. Research demonstrations have shown pluggables with 4-core MCF coupled to 4-channel laser/photodiode arrays [6]. Co-packaged optics with MCF outputs are envisioned to interface multi-lane silicon photonics directly to a single fiber connector. | Typically lower-cost 850 nm VCSELs with multimode photodiodes. Power per bit can be low for short reach, but scaling VCSELs beyond 50 Gb/s per lane is challenging. For >100 Gb/s per lane, single-mode solutions are overtaking MMF in new deployments. | Standard single-mode transceivers at the same wavelengths can be used, simply connected to HCF. No special DSP is needed for propagation (the fiber is still fundamentally single-mode). Some commercial HCF links use interface boxes, but HCF can generally be treated like SMF with faster propagation. One consideration: connectors must keep the air core clean and may use expanded-beam or sealed interfaces. |
| Connectivity & handling | Ubiquitous standard connectors (LC, MPO, etc.) and fusion splicers. Technicians are fully trained; very “plug and play.” Each fiber carries one channel (or one direction of a duplex link). Managing large fiber counts (bundling, labeling) is a challenge at scale, but a well-understood one. | New connector and splicing solutions are required, but progressing rapidly. MCF fan-in/fan-out (FIFO) devices split cores to individual SMFs with insertion loss <0.2–0.3 dB [6]. Custom MCF ferrules (a single ferrule holding one multi-core fiber) allow direct MCF-to-MCF mating, e.g. a 4-core MCF in an SC/LC-type connector. Rotational alignment is critical: fibers include a marker element to key rotation, and splicers use cameras to auto-align cores and achieve low-loss splices (~0.1 dB). Once connectors and splices are in place, MCF handling is similar to SMF, and fewer fibers overall simplify cable management despite higher complexity per fiber [13]. | Same connector types as SMF (LC, MPO), usually color-coded or keyed differently to avoid confusion. Very familiar to technicians in enterprise settings. No special alignment issues (only one core). However, MMF links must be carefully power-budgeted for short reach and cannot easily be extended beyond their designed lengths. Managing parallel MMF ribbons is similar to managing parallel SMF ribbons. | Typically supplied pre-terminated with specialized connectors or pigtailed transceivers to avoid field termination (fusion splicing HCF is nontrivial because of its hollow structure). Current HCF deployments often use fixed lengths with connectors at the ends, and vendors provide training. Extra care is needed to keep the hollow core free of dust and humidity. Handling is improving with robust cabling (e.g. Lumenisity’s hollow-core cables place HCF in protective jacketing for data centers). Technicians may need some retraining in best practices. |

Table 1: Comparison of Single-Core Fiber, Multi-Core Fiber, Multi-Mode Fiber, and Hollow-Core Fiber for Key Data Center Metrics. MCF stands out in spatial capacity and density, while HCF provides unique latency benefits. (Metrics assume typical implementations: e.g. SMF and MCF using single-mode 1310/1550 nm signals; MMF using 850 nm VCSEL links; HCF using single-mode 1310/1550 nm in hollow core.)
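The latency row reduces to one relation: one-way propagation delay equals length × group index / c. The minimal Python sketch below compares a silica core (shared by SMF and MCF) with a hollow core. The group-index values 1.468 and 1.003 are illustrative assumptions, not figures taken from the sources cited in Table 1.

```python
# Propagation latency from group index: t = L * n_g / c.
# Both group-index values are ASSUMED, illustrative numbers.
SPEED_OF_LIGHT_KM_PER_US = 0.299792458  # vacuum speed of light in km per microsecond

def latency_us(length_km: float, group_index: float) -> float:
    """One-way propagation delay in microseconds."""
    return length_km * group_index / SPEED_OF_LIGHT_KM_PER_US

N_G_SILICA = 1.468  # assumed typical group index of a silica SMF/MCF core
N_G_HOLLOW = 1.003  # assumed group index of an air-guiding hollow core

for length_km in (0.1, 0.5, 2.0, 100.0):
    t_smf = latency_us(length_km, N_G_SILICA)
    t_hcf = latency_us(length_km, N_G_HOLLOW)
    print(f"{length_km:>6} km  SMF/MCF {t_smf:8.2f} us  HCF {t_hcf:8.2f} us  "
          f"saved {t_smf - t_hcf:6.2f} us")

reduction = 1 - N_G_HOLLOW / N_G_SILICA  # fractional cut in propagation delay
speedup = N_G_SILICA / N_G_HOLLOW - 1    # fractional increase in propagation speed
print(f"latency reduction {reduction:.1%}, speed increase {speedup:.1%}")
```

Under these assumptions a hollow core saves about 1.55 μs per km (roughly 32% of the silica delay), or about 0.78 μs on a 500 m intra-data center link, which is why the latency benefit weighs far more on metro and long-haul spans.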

4. MCF Advantages for Intra-Data Center Deployment

Within a data center, optical links are typically short (tens to hundreds of meters, up to ~2 km) and high-bandwidth, connecting leaf-spine switches, server racks, and increasingly, AI accelerator pods. These links form the fabric of AI clusters, where dozens or hundreds of GPU/TPU units must exchange data in parallel with minimal bottlenecks.

Figure 3. Network configuration example and physical layer parameters for data center (Image source: Optica Publishing Group)

MCF offers several compelling advantages in this context:

- Massive Bandwidth Density: By carrying multiple spatial channels within a single fiber cladding, Multi-Core Fiber (MCF) dramatically increases bandwidth per cable and per connector. For example, a single 4-core MCF can replace the four parallel single-mode fibers that carry one direction of a 400 GbE breakout (4×100 Gb/s) or even an 800 GbE link (4×200 Gb/s), reducing reliance on bulky multi-fiber ribbons. In a demonstration by OFS, a single 125 µm-cladding 8-core fiber supported 8×100 Gb/s = 800 Gb/s transmission over 2 km, performance that otherwise requires eight discrete SMFs in a typical DR8 or PSM4 setup [6]. MCF therefore offers much higher fiber density inside conduits and data center cable trays, significantly simplifying infrastructure deployment. As link counts scale into the hundreds or thousands, this reduction in cable bulk becomes critical, and the same density enables more compact paths between data centers or through congested underground ducts. Even higher core-count fibers (7-core, 12-core) could further boost capacity, but 4–8 cores per fiber is generally regarded as the practical sweet spot for intra-DC use [6].

- Reduced Fiber Footprint and Easier Management: Fewer physical fibers are needed for a given aggregate capacity. This translates into fewer connectors, patch cords, and terminations to handle, and, for network operators, less bulk to manage and maintain. A simple example: replacing four LC patch cords with a single 4-core MCF cable yields a 75% reduction in fiber count (and a similar reduction in occupied space) [4]. Over an entire facility with, say, 10,000 internal links, the fiber count reduction is enormous. As Table 1 showed, the MCF approach consolidates multiple light paths in one strand, which simplifies cable routing and could shrink cable bundles by up to a factor equal to the core count. Technicians would no longer need to install and label as many individual fibers; instead, they manage one fiber carrying multiple channels. This can also improve reliability, since fewer physical connections mean fewer points of failure. (Losing one MCF could drop multiple channels at once, but appropriate redundancy in the network topology can compensate, much as fiber trunk failures are handled today.) Industry experts expect the first applications of SDM fibers to be in data centers precisely because of these manageability and density benefits, where “simply increasing the number of optical fiber links may be too costly and unmanageable” and SDM provides a more scalable alternative [6, 8].

- Cost Efficiency (Scaling Bandwidth without Linear Cost Increase): In the long run, MCF has the potential to lower the cost per Gb/s of transmission. Initially, MCF fibers and connectors are more expensive than commodity SMF because of more complex fabrication and lower production volume. However, there are intrinsic cost advantages when scaling to many cores. The raw material (glass preform) cost per core drops substantially: a 4-core MCF with the standard 125 µm cladding uses essentially the same amount of glass as an SMF, so material cost per core is ~25% of a single fiber’s. The main added cost is in processing (fabricating the multi-core preform, ensuring quality, etc.). Fujikura notes that by increasing preform size (to draw longer fiber from one multi-core preform) and optimizing manufacturing, it can sharply reduce the processing cost per core. With ongoing R&D, volume production of standard-diameter MCF is becoming feasible: Fujikura recently drew a 600 km-long spool of 4-core fiber from one preform, a world record demonstrating manufacturing maturity [12]. From a system perspective, MCF can also cut costs in other ways: fewer fibers to install (labor savings), fewer connectors and patch panels, and potentially consolidated transceivers. For instance, rather than using four separate optical modules for four fibers, one could use a single module that interfaces with an MCF and carries four channels. This integration (one multi-channel module instead of four separate modules) could yield savings in packaging and power. Today’s transceivers are often limited by faceplate density, and MCF allows fewer physical ports to achieve the same throughput, delaying or avoiding costly upgrades to new form factors or more expensive higher-speed optics. In summary, while MCF components initially carry a premium, the cost per Gb/s is expected to drop below that of deploying equivalent parallel fibers as the technology scales and standardizes.

- Power Efficiency and “Green” Scaling: Multi-core fibers can also confer power savings in certain scenarios. The benefit is subtler for short links, since no optical amplification is used inside the data center, but consolidating optics can reduce overhead power. If four channels go through one module instead of four, redundant control circuitry is eliminated, and components such as lasers can potentially be shared. For example, researchers have explored using one laser source to feed multiple modulators for different cores in externally modulated systems, or using a single multi-core driver IC. Similarly, sharing TEC (thermoelectric cooling) devices across multiple cores within transceivers or repeaters can yield additional savings. Spreading a given total throughput across parallel cores also lowers the optical power needed per core. In long-haul systems this effect is dramatic: distributing power across cores avoids the fiber nonlinearities that cap single-core capacity [7], thus improving bits per watt. In data centers nonlinearity is not a limiting factor, but there is still an advantage: multi-core fan-out devices have shown very low loss (on the order of 0.2–0.5 dB total for distributing signals to the cores), so nearly all launched power reaches the receivers. If those cores were replaced with separate fibers and connectors, total insertion loss could be higher (each fiber adds its own connector loss), which might require higher laser output or more amplification. Moreover, an MCF link can sometimes streamline the network topology, for instance by using one fiber to connect two multi-port devices directly (through a multi-core connector) where multiple parallel links, and perhaps extra intermediate switches, would otherwise be needed. This can reduce active equipment and thus electrical power consumption. Finally, high-core-count fibers could enable new architectures such as optical circuit switching fabrics that reduce energy use by dynamically reconfiguring lightpaths; having many cores in one fiber simplifies such fabric connectivity. The EXAT consortium specifically highlights energy savings as a benefit of SDM, expecting integrated MCF systems to yield space and power efficiencies in densely scaled networks [7].

- High Bandwidth Scaling for AI and HPC: Modern AI training clusters (sometimes called “AI supercomputers”) require all-to-all, high-bandwidth connectivity between accelerator nodes. This puts tremendous stress on the data center network, often necessitating multiple switch tiers and enormous cable counts. MCF provides a way to scale these fabrics with minimal disruption to existing optics. A set of standard 100 Gb/s or 200 Gb/s optical lanes can be grouped into one fiber to create a multi-terabit link, without resorting to exotic new modulation formats or >400 Gb/s single-channel transceivers. For example, 8-core fibers could carry 8×100 Gb/s = 800 Gb/s on a single fiber without any electrical multiplexing, which a single SMF could match only by running 8 wavelengths (requiring costlier WDM lasers) or by moving to a new 800G serial standard. In fact, a 1.6 Tb/s short-reach link was demonstrated over a 4-core MCF with four wavelengths per core at roughly 100 Gb/s each (56 GBd PAM4), i.e. 400 Gb/s per core [6]. This shows how MCF can enable terabit-scale connections by combining readily achievable per-channel rates. The business implication is clear: data center operators can meet scaling demands by adopting MCF links and reusing existing Ethernet optics technology on multiple cores, rather than waiting for ultra-complex single-channel solutions. This is a parallel approach to scaling, much as multi-core CPUs increased processing power in computing. Crucially, the aggregation happens in the optical domain, which can be more cost-effective and energy-efficient than multiplexing channels electronically.

- Direct Integration with Silicon Photonics: An often overlooked but significant advantage of MCF is how well it meshes with emerging silicon photonic (SiPh) interfaces. Silicon photonics integrates many optical transmitters and receivers onto chips (or chiplets) that can be co-packaged with switches or mounted near CPUs/GPUs. These photonic chips naturally produce multiple optical lanes (arrays of modulators, waveguides, etc.), and MCF is an ideal companion because a single fiber can carry all those lanes at once. Instead of, say, 8 separate fiber pigtails leaving a co-packaged optics module (a routing nightmare on a dense PCB), one could use just 1 or 2 MCFs carrying all the channels. In essence, MCF provides high-density optical I/O that matches the high-density electrical I/O of advanced chips. Researchers have explicitly pointed out that linear-array MCF designs (e.g. a 2×4 core arrangement) can map directly to transceiver arrays on PICs (photonic integrated circuits) [6]. By using an MCF whose cores mirror the spacing of on-chip lasers or photodiodes, the chip can be coupled to the fiber with potentially simpler optics. This could greatly simplify packaging for next-generation transceivers and enable pluggable or onboard modules in which a single connector carries multiple high-speed channels. In a 2019 paper presented at OFC (Optical Fiber Communication Conference), the world’s largest conference for optical networking and communications, OFS scientists noted that MCF is well suited to optical interconnects in data centers, where multicore amplification is not needed, and that MCF systems can provide direct connectivity to silicon photonic (SiPh) and InP chips for high-degree integration and high-density interconnects [6]. This means MCF can eliminate intermediate fan-outs in some cases: the fiber plugs directly into a transceiver with integrated multi-core coupling optics, making the entire link very streamlined. The payoff for the data center is not just more bandwidth but also potentially simpler network assembly (fewer cables to plug) and lower-loss interfaces, which circles back to the power and cost benefits.
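The fiber-count arithmetic behind the density and footprint points above can be sketched in a few lines. This is a hypothetical model, not a planning tool: it assumes every link is a duplex parallel-optic link with four lanes per direction (DR4-style), one core per lane per direction, and that Tx and Rx lanes may share one multi-core fiber. The function name `fibers_needed` is illustrative.

```python
import math

def fibers_needed(links: int, lanes_per_direction: int, cores_per_fiber: int = 1) -> int:
    """Total fiber strands for `links` duplex parallel-optic links (hypothetical model).

    ASSUMPTIONS: each lane in each direction occupies one core, and the Tx and Rx
    cores of a link are packed into as few fibers as possible (they may share a fiber).
    """
    cores_per_link = 2 * lanes_per_direction  # Tx lanes + Rx lanes
    return links * math.ceil(cores_per_link / cores_per_fiber)

LINKS = 10_000  # the "say, 10,000 internal links" facility mentioned in the text
LANES = 4       # assumed 4 lanes per direction, as in a DR4-style link

baseline = fibers_needed(LINKS, LANES)  # standard single-core SMF
for cores in (1, 4, 8):
    n = fibers_needed(LINKS, LANES, cores)
    print(f"{cores}-core fiber: {n:,} strands ({1 - n / baseline:.1%} fewer)")
```

With these assumptions the sketch gives 80,000 strands with standard SMF, 20,000 with 4-core MCF (75% fewer), and 10,000 with 8-core MCF (87.5% fewer). The reduction equals the core count only when lanes divide evenly into cores; a 7-core fiber, for example, would still need two strands per 8-core link.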

In summary, within data centers MCF enables much greater bandwidth per fiber run, yielding a leaner physical network that is easier to scale. It leverages parallelism in optics to meet demand growth in a way that’s compatible with existing infrastructure: MCF can be deployed using the same rack space and similar handling as normal fiber (especially with standard-diameter variants), but delivers multi-fiber capacity over a single strand. This is why many experts believe the first real-world adoptions of MCF will occur inside large data centers [3, 6, 14] – the environment is controlled (short, no amplifiers needed), and the immediate rewards (space, weight, and management savings) are significant for the operators.
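The terabit figures in the AI and HPC discussion reduce to simple lane arithmetic: aggregate capacity is cores × wavelengths per core × rate per wavelength, and PAM4 carries 2 bits per symbol. The sketch below assumes about 100 Gb/s of payload per 56 GBd PAM4 wavelength (112 Gb/s line rate less coding and FEC overhead); the function names are illustrative.

```python
def pam4_line_rate_gbps(baud_gbd: float) -> float:
    """PAM4 encodes 2 bits per symbol, so line rate = 2 x symbol rate."""
    return 2 * baud_gbd

def fiber_capacity_gbps(cores: int, wavelengths_per_core: int, gbps_per_wavelength: float) -> float:
    """Aggregate capacity of an uncoupled MCF: every core is an independent channel."""
    return cores * wavelengths_per_core * gbps_per_wavelength

line = pam4_line_rate_gbps(56)         # raw line rate per 56 GBd wavelength
per_core = 4 * 100                     # ASSUMED: four ~100 Gb/s payload wavelengths per core
total = fiber_capacity_gbps(4, 4, 100) # 4-core MCF, 4 wavelengths per core
print(line, per_core, total)           # 112 400 1600
print(fiber_capacity_gbps(8, 1, 100))  # 8-core fiber, one 100 Gb/s lane per core: 800
```

The same function shows why core count and wavelength count are interchangeable levers: 4 cores × 4 wavelengths and 16 cores × 1 wavelength give the same aggregate, so operators can trade WDM laser cost against fiber core count.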

5. Technical Challenges and Solutions for MCF Deployment

As promising as MCF is, its deployment comes with technical challenges distinct from traditional fiber. Here we address the main concerns — crosstalk, fan-in/fan-out coupling, splicing/connectors, and fiber management — and summarize the solutions and progress in each area. A fair, balanced view is given, noting which challenges have been largely solved and which are still being refined.

5.1 Inter-Core Crosstalk:

Because multiple cores share the same fiber cladding, some light can leak between cores over long distances, potentially causing channel interference. Crosstalk (XT) depends strongly on fiber design (core spacing, refractive-index profile, and cladding geometry) as well as on transmission length and wavelength. In early MCF experiments over a decade ago, inter-core crosstalk was a major concern, particularly in long-haul transmission systems where even weak coupling accumulates over hundreds of kilometers. For short-reach applications such as intra-data center links, crosstalk is inherently less problematic: the short distances leave little opportunity for significant power exchange between adjacent cores, even when moderate coupling exists. In recent years, significant progress has been made in suppressing crosstalk through trench-assisted index profiles, optimized core layouts, and increased core pitch. Experimental studies have demonstrated that properly designed trench-assisted MCFs can achieve inter-core crosstalk below –60 dB/km under controlled conditions [15]. Even at 1550 nm, where coupling tends to be stronger because of the larger mode field diameter, short links (<100 m) can keep crosstalk well below levels that would affect system performance. Indeed, multiple studies confirm that crosstalk in uncoupled MCF is not severe over the short link spans inside a data center [6]. If necessary, system designers can also mitigate residual XT through channel assignment, e.g. not placing high-power channels on adjacent cores, or leaving a core dark as a guard (though this sacrifices capacity and has generally not been needed with recent designs). It is also worth distinguishing between uncoupled MCF (each core essentially isolated except for tiny leakage) and coupled-core MCF (where cores are intentionally placed close together and strongly coupled, relying on MIMO techniques). The latter is a research topic aimed at spectral efficiency, but for data center use the uncoupled approach is preferred: it is simpler, requires no MIMO DSP, and its crosstalk can be engineered to be ultra-low. Real-world deployment experience is also accumulating: in a field trial in L’Aquila, Italy, 4-core and 8-core fibers were deployed in tunnels, and cabling was found not to increase crosstalk appreciably, with the cores remaining stable and well isolated in practice [2]. In summary, with proper fiber design, inter-core XT can be kept low enough to have negligible impact over the short distances of intra-data center links. Ongoing innovations, such as heterogeneous core layouts (varying core size or index) and cores arranged in concentric rings, further allow high core counts with controlled XT [3]. Network planners can be confident that crosstalk will not significantly affect error rates or require complex mitigation for properly designed MCFs in the data center environment.
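For uncoupled MCF in the weak-coupling regime, mean inter-core crosstalk power grows roughly linearly with length, so a per-km figure scales as XT(L) = XT(1 km) + 10·log10(L / 1 km) in dB. The sketch below applies this to the 1550 nm value quoted for the 4-core MCF in Table 1 (–36 dB/km [1]). It ignores statistical fluctuations, bends, and aggregation over several neighboring cores, so treat it as a rough estimate rather than a design rule.

```python
import math

def xt_at_length_db(xt_db_per_km: float, length_km: float) -> float:
    """Mean accumulated inter-core crosstalk for an uncoupled MCF.

    ASSUMES the weak-coupling regime, where crosstalk power grows linearly
    with length: XT(L) [dB] = XT(1 km) [dB] + 10*log10(L / 1 km).
    """
    return xt_db_per_km + 10 * math.log10(length_km)

# 1550 nm per-km figure quoted for the 4-core MCF in Table 1 ([1]).
XT_1550_DB_PER_KM = -36.0

for length_km in (0.1, 0.5, 2.0, 100.0):
    print(f"{length_km:>6} km: {xt_at_length_db(XT_1550_DB_PER_KM, length_km):6.1f} dB")
```

Under this model a 500 m link sees about –39 dB and a 2 km link about –33 dB, while the same fiber over 100 km would reach about –16 dB, illustrating why crosstalk is a long-haul concern far more than an intra-data center one.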

5.2 Fan-In/Fan-Out (FIFO) Coupling:

One of the most critical aspects of using MCF is getting signals into and out of the multiple cores. Standard transceivers emit and receive on single-core pigtails (SMF or MMF). To interface these to an MCF, a fan-out device is used: essentially an optical combiner/splitter with N single fibers on one side and an N-core fiber on the other. Early in MCF development this was a major challenge: how to achieve low loss and low crosstalk without an enormous, bulky apparatus. Fortunately, considerable progress has been made. The simplest solution, the fiber-bundle fan-out, has proved very effective. This approach uses a tiny array of single-mode fibers precisely aligned to each core of the MCF, then tapered/fused or held in a precision ferrule. Coupling losses below 0.2 dB have been achieved in 7-core fiber fan-outs [6], meaning virtually all the light from each small fiber enters the corresponding MCF core. Crosstalk within such a fan-out was measured at <–65 dB [6], so it does not degrade link performance. Researchers have even developed compact MU connector-type fan-outs (MU is a small form-factor connector) with insertion loss <0.32 dB using fiber bundles [6]. These can serve as pluggable “pigtail” converters between MCF and conventional fiber.

However, using a separate fan-out component for every MCF link may add cost and complexity if done discretely. For intra-rack connections, one could envision a cleaner approach: directly connecting an MCF to a multi-channel transceiver. To this end, recent prototypes have shown integrated receptacles where a photonic module has an MCF connector built-in. One reported design used a 4-core MCF aligned to a 4-channel 1310 nm laser array within a pluggable QSFP-style module [6]. Essentially, the fan-out was internal: a fiber bundle inside the transceiver coupled the lasers/PDs to the 4-core fiber receptacle. This kind of solution is promising for commercialization – it hides the complexity from the user (the module just has an MCF port) and ensures everything is aligned from factory assembly. Looking further ahead, the holy grail is to eliminate the fan-out entirely by coupling the fiber cores directly to on-chip waveguides. This has been demonstrated in labs: for example, using 2D grating couplers or butt-coupling from a photonic chip into a multi-core fiber face. Such fully integrated MCF interfaces would be most cost-effective and scalable, as they remove one coupling stage and can be made in volume with lithographic precision. The trade-off is that it requires co-design of fibers and chips (ensuring core spacing matches waveguide spacing, etc.). Given the active research, it’s likely we will see integrated multi-core fiber connectivity in future switch and router products – especially if co-packaged optics becomes mainstream, since one could mount an MCF connector directly on the package to carry all lanes.

In summary, fan-in/fan-out technology is no longer a show-stopper. The basic devices work well (sub-1 dB loss for connecting MCF to SMF is routinely achieved [4, 6]), and they are getting smaller and more integrated. For initial deployments, one might use small form-factor bundle fan-outs or multi-core patch cords to connect legacy gear to MCF links. As ecosystem support grows, we will see MCF-compatible transceivers that make the coupling transparent to the end user. Aligning multiple fibers to multiple cores does add some manufacturing complexity, but companies such as NTT, Sumitomo Electric, and Fujikura have all developed precise MCF connector assemblies, and even MPO-style connectors for multi-core fibers [13]. The existence of these components, and their use in field trials, shows that the industry is solving the puzzle of “how do we plug in an MCF?” Indeed, a recent Fujikura paper presented connection technologies for 100 μm cladding 4-core MCF, demonstrating prototype fan-in/fan-out devices and even LC connectors adapted for the thinner fiber, with an average insertion loss of ~0.57 dB and return loss above 56 dB, which met standard connector specifications. The authors concluded that even a 100 μm MCF can have “equivalent performance potential for connectivity” to conventional fibers [4]. Results like this give confidence that standard-like connectivity for MCF is feasible.

5.3 Splicing and Field Handling:

Joining multi-core fibers to each other (or to pigtails) by fusion splicing is another challenge that has seen steady progress. A fusion splicer for MCF must align not only the lateral position of the cores but also the rotational angle, so that the core patterns match up. Early MCF splicing relied on painstaking manual alignment or image recognition of core traces. Specialized MCF fusion splicers have since been developed; for example, Fujikura’s FSM-100P is a commercial splicer that supports multi-core fiber splicing [1]. These splicers use multi-axis cameras to locate the cores (or a built-in marker in the fiber) and then perform automated rotational alignment before fusing. Reported splice losses for 4-core fiber are impressively low: on the order of 0.1–0.2 dB per core at 1310 nm, similar to single-fiber splices [13]. A marker rod or patterned cladding in the fiber (used, for example, by Fujikura, and by the University of Bath group in its multi-core hollow-core fiber [11]) helps identify orientation. In essence, the splicer “sees” the core layout and rotates the fiber until, say, the marker is in a known position, then aligns and splices. Field tests have shown that with these tools, trained installation technicians can splice MCF in roughly the same time as a standard fiber. NTT has in fact developed a whole “lineup” of on-site construction and maintenance technologies for 4-core fiber in anticipation of commercial introduction, including portable splicers, per-core test equipment, and procedures for troubleshooting multi-core links [16]. So, while splicing MCF is inherently more complex, the industry has prepared to support it operationally. The need for splicing in data centers may actually be limited (many links within rooms are patch-cord based), but splicing will be used for longer runs and permanent trunk cables.
With the proper equipment, multi-core fusion splices can now deliver low-loss, low-reflection joints comparable to single-core fiber splices.
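The marker-based rotational alignment described above can be sketched in a few lines. This is a simplified illustration, not the algorithm of any particular splicer: it assumes the camera system has already measured the marker angle and the core angles on each fiber end, and all function names are hypothetical. A square 4-core layout looks identical after a 90° turn, so core angles alone cannot distinguish core 1 from core 2; the marker breaks that symmetry.

```python
import math
from typing import List


def wrap_deg(angle: float) -> float:
    """Wrap an angle into the interval (-180, 180] degrees."""
    a = math.fmod(angle, 360.0)
    if a <= -180.0:
        a += 360.0
    elif a > 180.0:
        a -= 360.0
    return a


def rotation_to_reference(marker_deg: float, reference_deg: float = 0.0) -> float:
    """Rotation (degrees) that brings the detected marker to the reference angle."""
    return wrap_deg(reference_deg - marker_deg)


def rotate_cores(core_angles_deg: List[float], rotation_deg: float) -> List[float]:
    """Apply the same rotation to every detected core angle."""
    return [wrap_deg(a + rotation_deg) for a in core_angles_deg]


def residual_misalignment(left: List[float], right: List[float]) -> float:
    """Worst-case angular mismatch between paired cores (lists are in core-number order)."""
    return max(abs(wrap_deg(a - b)) for a, b in zip(left, right))


# Left fiber: marker already at 0 deg, four cores on a square layout.
left_cores = [45.0, 135.0, -135.0, -45.0]
# Right fiber as imaged: marker found at 30 deg, cores offset by the same amount.
right_marker, right_cores = 30.0, [75.0, 165.0, -105.0, -15.0]

rot = rotation_to_reference(right_marker)
aligned = rotate_cores(right_cores, rot)
print(rot, residual_misalignment(left_cores, aligned))
```

A real splicer would follow this coarse rotation with fine lateral and angular optimization on the imaged cores before fusing.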

For connectors, as discussed, new ferrule designs allow multi-core termination. One approach is to use MT ferrules (like those in MPO connectors) to hold multiple multi-core fibers. Another is to use a modified LC/SC ferrule with a slightly larger bore that precisely centers a multi-core fiber, plus keying to maintain orientation. Connectors have been made for 4-core fibers in which two keyed LC connectors mate a pair of 4-core fibers core-to-core. Work is ongoing to standardize such connectors so that vendors can provide interoperable solutions. In the interim, many MCF deployments will likely use pigtails (MCF pre-terminated to single-core breakouts or transceivers) to avoid manual field termination, much as ribbon fiber cables are often terminated by splicing on a factory-made MPO fan-out. The bottom line is that connection and splicing issues, while non-trivial, have largely been addressed by advanced tools. In practical terms, a data center using MCF would deploy pre-terminated cables, or rely on trained staff with the new splicers for any field joints. These operational considerations are being ironed out now that MCF is entering commercial trials.

5.4 Fiber Management and Network Design:

Introducing MCF into a network means managing not only fibers but also the channels within each fiber. Each core in an MCF behaves like a separate link that could potentially go to a different endpoint (though an MCF’s cores will often be used as a group between two locations). This creates new requirements for fiber management databases, patch panel labeling, and so on. The industry will need conventions for identifying cores (e.g. numbering them 1–4, or using colored markers on connectors). In factory cables, cores can be color-coded via slight differences in doping that are visible under a microscope, or one can simply rely on documentation, since the core layout is fixed (for example, in a 7-core hexagonal layout, the center core might be numbered 1 and the outer cores 2–7 clockwise). Routing and patching with MCF could be handled in two ways: either always keep cores together (patch panel ports correspond to entire fibers, not individual cores, so one fiber remains the unit of connectivity), or break out cores at patch panels to different destinations. The former is simpler and likely for initial deployments, e.g. using a 4-core fiber as a “high-capacity trunk” between two specific switches. The latter (dynamic core-level cross-connects) would require core selectors or multi-core fiber patch panels that internally fan out cores to separate connectors. While possible, this complicates operations and is not necessary to realize MCF’s main benefits. Most data centers will likely treat an MCF as a high-capacity pipe between fixed endpoints, at least in the near term. This is analogous to how ribbon fiber cables are often used: one does not usually reconfigure which sub-fiber goes where; the whole ribbon goes to a matching ribbon at the far end.
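A documentation convention like the 7-core example above can be captured as a simple lookup table that maps core numbers to end-face positions. The sketch below is hypothetical: it assumes the end face is viewed looking into the fiber with the orientation marker at 12 o'clock, and the 40 µm core pitch is an arbitrary example value rather than the geometry of any specific product.

```python
import math
from typing import Dict, Tuple


def hex7_core_map(pitch_um: float, start_deg: float = 90.0) -> Dict[int, Tuple[float, float]]:
    """Map core numbers to (x, y) positions in micrometres for a 7-core hexagonal MCF.

    Core 1 is the centre core; cores 2-7 sit on a ring of radius `pitch_um`,
    numbered clockwise starting at `start_deg` (90 degrees = 12 o'clock).
    """
    cores = {1: (0.0, 0.0)}
    for i in range(6):
        angle = math.radians(start_deg - 60.0 * i)  # subtracting the angle = clockwise
        x = round(pitch_um * math.cos(angle), 3) + 0.0  # "+ 0.0" normalises -0.0 to 0.0
        y = round(pitch_um * math.sin(angle), 3) + 0.0
        cores[i + 2] = (x, y)
    return cores


for core, (x, y) in hex7_core_map(pitch_um=40.0).items():
    print(f"core {core}: x={x:+.1f} um, y={y:+.1f} um")
```

Because the table is generated from one rule, patch-panel labels, test reports, and inventory records can all be derived from the same source instead of being typed by hand.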

On the positive side, using MCF dramatically reduces the number of individual fibers to manage, as discussed. This means fewer cable tags, less risk of congestion in pathways, and potentially cleaner rack cabling. A more interesting challenge is monitoring and testing MCF links. Each core must be tested for continuity and loss; equipment vendors have developed multi-channel OTDRs and light sources that can inject signals into each core sequentially, often using a fan-out device in the test gear. Similarly, connector inspection microscopes need to handle multi-core ferrules (ensuring all cores are clean and aligned). These needs are manageable with updated procedures and some new adapter tips. NICT’s field experiments have shown that, once installed, multi-core fibers can be very stable over time (e.g. core skew and phase remained stable in a tunnel over months) [2], which bodes well for maintenance: they do not introduce unpredictable impairments.
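The sequential per-core test described above reduces to a simple loop: select a core, measure, record, and flag anything over the acceptance limit. In the sketch below, `measure_loss_db` is a hypothetical stand-in for whatever interface the test set exposes, and the readings and 1.5 dB limit are made-up example values.

```python
from typing import Callable, Dict, List, Tuple


def check_all_cores(measure_loss_db: Callable[[int], float],
                    n_cores: int,
                    limit_db: float) -> Tuple[Dict[int, float], List[int]]:
    """Measure each core in turn and return (per-core results, failing cores).

    `measure_loss_db` is a hypothetical callback: given a core number (1-based),
    it routes the source through the fan-out to that core and returns the
    measured end-to-end loss in dB.
    """
    results: Dict[int, float] = {}
    for core in range(1, n_cores + 1):
        results[core] = measure_loss_db(core)  # one core at a time, sequentially
    failing = [core for core, loss in results.items() if loss > limit_db]
    return results, failing


# Usage with canned readings in place of real test gear (values are made up).
readings = {1: 0.8, 2: 0.9, 3: 2.1, 4: 0.7}
results, failing = check_all_cores(readings.__getitem__, n_cores=4, limit_db=1.5)
print(failing)
```

Keeping results per core, rather than a single pass/fail per fiber, matters because one dirty or damaged core can fail while its neighbors in the same ferrule pass.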

From a network design perspective, protection and redundancy must be considered. If an MCF carries, say, 4 critical links in one fiber, a single physical fault (such as a fiber cut) could knock out all 4. Network architects should plan redundancy so that not all parallel paths share the same fiber, much as fiber routes are diversified today. Redundancy may actually be easier to provide, because MCF reduces fiber count so much that adding a second, parallel MCF for protection is not an undue cost. For instance, instead of eight separate fibers (four primary, four backup), one could deploy two 4-core MCFs and distribute core usage so that each carries half the primaries and half the backups, providing resiliency if either fiber fails. This flexibility in assigning traffic to cores can be leveraged for high availability.
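The two-fiber protection scheme above can be expressed as a small assignment routine plus a check that no single fiber cut takes down both paths of any link. This is a minimal sketch under stated assumptions: each link needs one primary and one backup core, fibers are labelled with hypothetical names ("MCF-A", "MCF-B"), and cores are allocated in order.

```python
from typing import Dict, List, Sequence, Tuple

Placement = Dict[str, Dict[str, Tuple[str, int]]]  # link -> role -> (fiber, core)


def assign_protected(links: List[str],
                     fibers: Sequence[str] = ("MCF-A", "MCF-B"),
                     cores_per_fiber: int = 4) -> Placement:
    """Alternate primaries between the two fibers; each backup goes on the other fiber."""
    next_core = {f: 1 for f in fibers}
    plan: Placement = {}
    for i, link in enumerate(links):
        primary, backup = fibers[i % 2], fibers[(i + 1) % 2]
        plan[link] = {}
        for role, fiber in (("primary", primary), ("backup", backup)):
            if next_core[fiber] > cores_per_fiber:
                raise ValueError(f"{fiber} has no free cores")
            plan[link][role] = (fiber, next_core[fiber])
            next_core[fiber] += 1
    return plan


def survives_any_single_cut(plan: Placement, fibers: Sequence[str]) -> bool:
    """True if, for every possible single-fiber cut, each link keeps one path."""
    for cut in fibers:
        for roles in plan.values():
            if all(fiber == cut for fiber, _ in roles.values()):
                return False
    return True


plan = assign_protected(["L1", "L2", "L3", "L4"])
for link, roles in plan.items():
    print(link, roles)
print(survives_any_single_cut(plan, ("MCF-A", "MCF-B")))
```

With four links, each fiber ends up carrying two primaries and two backups, matching the split described in the text, and all eight cores are used.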

6. MCF for Inter-Data Center Connectivity (Metro/Regional)

While the primary focus is on intra-data center use, multi-core fiber can also deliver benefits in connecting data centers to each other, whether across a campus or across longer distances (metro fiber routes). Hyperscalers often build campus networks linking multiple warehouse-sized data centers in proximity (within a few kilometers). These links carry enormous aggregate traffic (e.g. for redundancy between sites or splitting workloads) and are typically implemented with many parallel fibers or a few high-fiber-count cables. MCF is very attractive here: it can multiply capacity per cable and ease duct congestion. For instance, two nearby data centers could be connected with a handful of 8-core fibers instead of dozens of standard fibers, potentially fitting within a single small conduit. The short distance means no optical amplification is needed; one can run the same transceivers as inside the data center. In fact, any link that is basically an “extended patch cord” (a few kilometers of fiber) is an ideal candidate for early MCF adoption, because it doesn’t depend on new amplifier technology or changes in the optical layer beyond the fiber itself.

Figure 4. Example of different data center interconnect (DCI) links. (Image source: EFFECT Photonics)

For somewhat longer links (tens of km, intra-city), MCF can still be used, with some additional considerations. If the span exceeds the reach of grey optics (~10 km) or CWDM/DWDM optics (40–80 km), one would traditionally use amplifiers. Multi-core optical amplifiers (e.g. multi-core EDFAs) have been a research topic: essentially a single amplifier unit that boosts all cores simultaneously using a common pump laser, which can improve integration and efficiency. There have been successful demonstrations of such devices (e.g. a multi-core EDFA that amplifies 7 cores uniformly). However, these are not yet commercial off-the-shelf products. In the interim, an MCF could be broken out into individual cores at an amplifier site, run through parallel single-core EDFAs (one per core), and then recombined into an MCF, which is clearly more complex than a single-core system. Early inter-data-center deployments of MCF will therefore likely be limited to distances that can be covered without repeaters, say up to ~40 km (which modern coherent or advanced direct-detect optics can often handle). Fortunately, many data center interconnect (DCI) needs fall within this range (connecting campuses within a metro area).
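Whether a given DCI span needs amplification can be estimated by dividing the loss budget left after fixed losses by the fiber attenuation. The sketch below is loss-limited only (it ignores dispersion and other reach limits of direct-detect optics), and apart from the ~0.2 dB fan-out figure quoted earlier in this section, every number (20 dB budget, 0.25 dB/km, connector count and loss, 3 dB margin) is an assumed example value; the function name is hypothetical.

```python
def max_unamplified_reach_km(power_budget_db: float,
                             atten_db_per_km: float,
                             fanout_db: float = 0.2,     # per fan-in/fan-out device
                             n_fanouts: int = 2,
                             connector_db: float = 0.5,  # assumed per mated connector
                             n_connectors: int = 4,
                             margin_db: float = 3.0) -> float:
    """Longest span (km) whose total loss fits the budget; loss-limited estimate only."""
    fixed_db = n_fanouts * fanout_db + n_connectors * connector_db + margin_db
    available_db = power_budget_db - fixed_db
    if available_db <= 0:
        return 0.0  # fixed losses alone exceed the budget
    return available_db / atten_db_per_km


reach = max_unamplified_reach_km(power_budget_db=20.0, atten_db_per_km=0.25)
print(f"{reach:.1f} km")
```

With these assumptions the fan-outs consume only 0.4 dB of a 20 dB budget, so the attenuation of the cores themselves, not the MCF coupling, is what sets the unamplified reach.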

One notable development is that the first submarine cable using MCF is now planned: the Google-led “TPU” (Taiwan-Philippines-U.S.) cable will reportedly deploy multi-core fiber, marking the first commercial MCF deployment in a long-haul system [18]. This undersea use highlights MCF’s advantage of maintaining high capacity in a limited cable size (undersea cables are very space and power-constrained). The fact that companies are willing to trust MCF in a critical subsea route by 2023–2024 [18] underscores its growing maturity. For terrestrial data center links, this means by the time one is considering MCF for metro, the technology will have been proven in even harsher long-distance environments. Indeed, NTT and NEC have successfully trialed a 12-core fiber over 7,200 km with repeaters [17], showing that even in extreme scenarios MCF can perform.

For inter-building connectivity on a campus, MCF can be deployed today much like any other fiber cable, with the benefit that one MCF cable might carry 4× or 7× the capacity of a standard cable of the same diameter. The field-deployed testbed in Italy showed that standard loose-tube cables can house MCFs alongside regular fibers without issue [2]. Installers noted no significant differences in pulling or splicing the cable, aside from the need to use the right splicing programs. Field performance was excellent, with no in-situ increase in loss after deployment; notably, crosstalk in the uncoupled MCF did not change after installation, meaning the cabling and laying process did not induce additional coupling [2]. This gives confidence that deploying MCF in real ducts or trenches will not introduce unforeseen penalties: the fibers behave as expected.

MCF can also simplify running parallel links between sites. Suppose two data centers exchange 16 fiber pairs' worth of traffic (32 single-core fibers). If each duplex link uses 2 cores (one for Tx, one for Rx), a 4-core MCF carries 2 links, so 8 strands of 4-core MCF (or 4 strands of 8-core MCF) could replace the 16 separate fiber pairs. Fewer fibers to route between buildings means potentially higher reliability and easier upgrades (one could initially light 2 cores and light more later as needed, providing graceful capacity growth). Because the attenuation of each core is as low as that of standard fiber, there is essentially no distance compromise beyond the small fan-in/fan-out loss: if SMF could do it, MCF can as well. The main requirement is coupling devices at the ends, which, as covered, are becoming readily available.
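The strand count in the example above follows from integer division: each strand holds as many complete duplex links as it has pairs of cores. The sketch below assumes 2 cores per duplex link and that no link is split across strands; the function name is hypothetical.

```python
import math


def mcf_strands_needed(fiber_pairs: int, cores_per_fiber: int,
                       cores_per_link: int = 2) -> int:
    """MCF strands needed to carry `fiber_pairs` duplex links.

    Assumes each duplex link uses `cores_per_link` cores (one Tx, one Rx) and
    that a link is never split across two strands.
    """
    if cores_per_fiber < cores_per_link:
        raise ValueError("each strand must hold at least one full link")
    links_per_strand = cores_per_fiber // cores_per_link  # an odd leftover core stays unused
    return math.ceil(fiber_pairs / links_per_strand)


for cores in (4, 7, 8):
    print(cores, "cores ->", mcf_strands_needed(16, cores), "strands")
```

The 7-core case shows a subtlety of odd core counts in duplex use: one core per strand is left over unless it is reserved as a spare or used for a bidirectional channel.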

In summary, for inter-data center and campus connectivity, MCF extends its advantages of capacity density and space/power efficiency beyond a single building. It allows massive trunk groups to be collapsed into a handful of fibers, which is valuable in often-congested metro fiber ducts. By reducing cable count, MCF can also reduce leasing costs in shared conduits (where leases are priced per cable) and make pulling new capacity easier (smaller cables can be pulled through occupied ducts). The technical hurdle of amplification for >50 km links remains, but ongoing R&D in multi-core amplifiers is likely to solve it in the coming years, unlocking MCF for even longer hauls. In the interim, expect to see MCF used in metro DCI for shorter hops, possibly as part of bundled solutions (where a vendor provides transceivers with integrated fan-outs for, say, a 4-core DCI link). Given the strong push by Microsoft and others on hollow-core fiber for low latency, we might see a scenario in which hollow-core fiber is used for ultra-low-latency routes and multi-core fiber for ultra-high-capacity routes, each finding its niche in the interconnect toolkit [19]. It is an exciting possibility that both advanced fiber types will complement each other in next-generation data center networks.

7. Business Impact and Value Proposition

The technical analysis above highlights that multi-core fiber can deliver more capacity in less space, with manageable complexity. Here we distill how those technical merits translate to business benefits for data center operators and investors evaluating MCF-based solutions:

- Dramatically Increased Bandwidth Density: For a given physical cable infrastructure, MCF allows far more traffic to flow, in effect sweating the fiber-plant assets much harder. In a large data center, fiber cabling is part of capital expenditure; deploying MCF means that the same number of cables (or fewer) can handle future traffic growth that would otherwise require many additional runs. For new builds, this can reduce the material and labor needed for cabling (lower cabling CAPEX). For existing facilities, it means port speeds and counts can scale up without filling all available conduits. As an example, using 8-core fibers in lieu of SMFs provides on the order of 8× the bandwidth per cable; an operator can thus upgrade to, say, 800G or 1.6T interconnects by swapping in MCF links rather than installing 8× more parallel fibers. The value is that high-capacity upgrades can happen within existing infrastructure limits, prolonging the life of the physical cable plant and deferring costly overhauls (like adding new cable trays or cutting new duct pathways). This is very attractive in multi-tenant data centers or older facilities where adding fiber is disruptive. Essentially, MCF offers a capacity multiplier that protects infrastructure investments: a single MCF could carry, for instance, 16 wavelengths across 4 cores (which one could view as equivalent to a bundle of 16 fibers) [6]. Fewer fibers carrying more throughput also simplifies DCIM (data center infrastructure management), as there are fewer physical links to document and monitor.

- Footprint and Space Savings Drive Lower Operational Costs: Space in racks and cable raceways has a cost. By consolidating fibers, MCF can free up rack units (fewer large patch panels are needed) and reduce under-floor or overhead cable bulk that can impede airflow. Better airflow can translate to cooling energy savings: large cable bundles sometimes act like “air dams” under raised floors, so leaner cabling is greener. Lighter cable loads can also be installed faster and with less risk. Quantitatively, if a data center can reduce cabling volume by 50–75%, it may be able to delay expansion or fit more revenue-generating equipment in the same space. VAFC’s analysis shows that replacing legacy bundle cables with MCF in an AI cluster network could reduce cable tray fill by an estimated 60%, easing maintenance access and potentially allowing higher hardware density in pods without cabling congestion. These operational efficiencies improve the facility’s ability to handle growth smoothly. From an investor perspective, this means better utilization of white space and potentially lower OPEX (operating expense) for cooling and cable management labor.

- Scaling Without Linearly Scaling Cost: One of the biggest advantages emerges when considering future scale. Normally, doubling network capacity might double the number of transceivers, fibers, and ports, roughly doubling cost. With MCF, doubling capacity could be as simple as lighting additional cores on fibers already in place, or using the same number of transceivers but ones that support multi-core outputs. For example, instead of eight 100G modules and eight fibers, an operator could use two 4-core 400G modules and two fibers to achieve 800G total. The cost of those two modules might be less than that of eight lower-speed ones (due to integration efficiencies), and managing 2 fibers is clearly cheaper than managing 8. In effect, MCF bends the cost curve, especially as the technology matures. Early on, the premium for multi-core optics might offset some savings, but as standards emerge (for instance, a future IEEE Ethernet standard could directly support parallel cores, much as parallel lanes are supported now), economies of scale will kick in. The raw material cost per bit of MCF is inherently lower: in a 4-core fiber with the standard 125 µm cladding, each core uses about one quarter of the glass of an SMF, i.e. roughly 75% less raw glass per core. As manufacturing scales, the selling price of multi-core fiber could approach that of single-core fiber on a per-core basis. Similarly, connector costs get amortized: one connector mating serves N cores instead of one mating per core. That means fewer components to buy and maintain overall. The long-term impact is that network upgrades might involve fewer physical items (fibers, connectors, etc.), focusing cost on more advanced but consolidated optics. This likely shifts spend from infrastructure to equipment, where it yields more value (optical bandwidth) rather than “just more glass”. For cloud companies, that translates to a potentially lower cost of bandwidth ($/Gb/s) delivered to end users, improving margins or enabling more competitive pricing.

- Power Savings and Sustainability: Although optical fiber itself does not consume power, the endpoints do. If MCF allows consolidation of transceivers, there can be power savings. For instance, one multi-core transceiver handling 4 channels might consume less power than four separate transceivers, because it can share overhead functions (control ICs, drivers, TEC cooling if any, etc.) and possibly use more efficient integrated photonics. Furthermore, if fewer fibers are needed, the need for certain amplification or signal conditioning equipment may be reduced. At the network level, MCF could enable simplified topologies (for example, one high-core-count fiber from leaf to spine could bypass intermediate patching or multiplexing devices). Every eliminated piece of active equipment saves power. Additionally, multi-core amplifiers (in future long links) would share pump lasers across multiple cores, which is more power-efficient than separate amplifiers per fiber [10]. In a holistic sense, MCF contributes to a greener network by transmitting more data per watt expended in the optical layer. Microsoft’s interest in hollow-core fiber was partly about latency and security, but they also note HCF’s potential to use broader spectrum with fewer nonlinear penalties [9]; similarly, MCF’s parallelism avoids pushing single channels into the nonlinear regime, which can reduce the need to overpower signals. While exact numbers depend on implementation, one can envision a 10–20% reduction in transceiver power when using multi-core optics versus equivalent separate optics, and even larger gains in systems with shared amplification. For large operators, even single-digit percentage improvements in optical efficiency can save millions in energy costs and help meet sustainability targets. MCF thus aligns with ESG (environmental, social, governance) goals by enabling capacity growth without linearly growing energy usage.

- Competitive Differentiation and Future-Proofing: Adopting multi-core fiber early can give cloud providers and data center companies a competitive edge. It enables them to handle AI-era traffic surges and offer high-bandwidth connectivity (e.g. between availability zones, or for customer HPC interconnect services) that others might struggle to provide with limited fiber. It is a way to stay ahead of the demand curve. From an investor standpoint, a company with MCF in its technology arsenal is potentially positioned to capture new markets (for instance, offering “optical fabrics” for AI-as-a-service with guaranteed high throughput). It can also defer costly overbuilds, since maximizing current facility capacity is often more cost-effective than building new facilities. MCF’s high-core-count versions (like 12-core fibers) provide a roadmap to extreme scaling (the EXAT roadmap discusses “dense SDM” beyond 30× channels [7]). Even if a 12-core fiber is not needed today in a single DC link, knowing that a path exists to go 2×, 4×, or 8× beyond current single-fiber limits is reassuring. It essentially “future-proofs” the fiber plant. One can deploy a moderate MCF now (say, 4 cores) and later replace or augment it with higher-core-count fiber as standards evolve, reusing much of the same infrastructure. By contrast, single-core fiber is already at the nonlinear capacity limit per core (around 100 Tb/s, as discussed, unless new spectral bands or novel modes are used) [7]. MCF breaks that ceiling by adding spatial channels, giving a long runway for growth.

- Market and Ecosystem Maturity: It is important for stakeholders to know that MCF is not just a lab curiosity. The ecosystem is quickly coalescing: international standards bodies (such as ITU and IEC) have active work on MCF specifications and test methods, major vendors are prototyping hardware, and early adopters (including telecom operators in Japan and large cloud players) are performing trials. For example, Sumitomo Electric’s 2-core fiber product entered mass production and was shortlisted for an industry award in 2024 [20], and OFS has shown its own MCF offerings [10], indicating that multiple suppliers are entering the fray. Microsoft’s high-profile acquisition of Lumenisity for hollow-core fiber [9] shows top-tier interest in advanced fiber; it is reasonable to expect similarly strong interest in multi-core fiber for capacity (indeed, many of the same companies are researching both). Analysts predict that as AI networking booms, solutions like MCF will see accelerated adoption to avoid bottlenecks in data movement. An investor reading this report can take away that MCF is a timely opportunity, not a far-future concept. The expected timeline for meaningful deployments inside data centers is the next 1–3 years, given successful trials such as those at NICT/EXAT and the standard-diameter 4-core fibers now available [4]. Companies investing in or adopting MCF technology now could therefore realize benefits, and possibly market differentiation, in the second half of the 2020s, in line with the anticipated ramp-up of AI and edge computing services that demand such high-bandwidth fabrics.

In summary, the business case for multi-core fiber in hyperscale and AI data centers is compelling: greater performance and capacity headroom, achieved with lower relative cost growth and operational complexity. It turns the challenge of exponential data growth from a fiber proliferation problem into a manageable engineering upgrade. By deploying MCF, data center operators can significantly improve their bandwidth supply density (Tb/s per m³ of space, or per cable), reduce the physical and ecological footprint of their networks, and ensure they can meet customer demands for massive interconnectivity (such as those between machine learning clusters or data analytics farms) without constantly reinventing the wheel. From an investor viewpoint, backing companies that leverage such forward-looking infrastructure can be attractive because it demonstrates both technological leadership and a practical approach to scaling costs. MCF’s strong value proposition – essentially doing more with less – resonates well in an industry that is expected to do exactly that in the coming decade.

8. Conclusion

Multi-core fiber technology represents a significant leap forward in data center optical infrastructure, offering a balanced solution to the pressing scaling challenges in hyperscale and AI networking. In this whitepaper, co-authored by VAFC Global with insights from NICT, EXAT, Fujikura, Sumitomo Electric and others, we have analyzed how MCF compares with single-core fiber, hollow-core fiber, and other SDM approaches. The evidence shows that MCF can deliver significant transmission capacity gains and bandwidth density improvements (on the order of N-fold increases with N-core fibers) while maintaining latency, reliability, and reach comparable to traditional fiber for the distances of interest. Unlike exotic solutions that address only one metric (for example, hollow-core for latency or multimode for short-term cost), MCF provides a holistic upgrade: it boosts capacity and density tremendously with only modest changes to existing fiber handling practices.

We examined the technical hurdles of crosstalk, coupling, splicing, and fiber management, and found that none is insurmountable. In fact, industry advancements have largely mitigated these issues. Modern MCF designs have crosstalk so low that short data center links operate as if each core were completely independent [6]. Fan-in/fan-out devices and integrated connectors are performing with losses well within acceptable ranges [4, 6], enabling efficient integration of MCF into current systems. Fusion splicers and test tools tailored for MCF are available, bringing deployment and maintenance close to the convenience of standard fiber [13]. These developments reflect a maturing ecosystem ready to support early adopters.

The advantages of MCF for hyperscale and AI data centers are clear. By increasing bandwidth per fiber and per connector, MCF enables networks to scale capacity within the same physical footprint, which is a significant advance as facilities strive to pack more compute and communication into limited space. The business benefits – higher throughput, lower cost per bit, reduced floor space, and potentially lower power per Gb/s – flow directly from these technical gains. In an era where AI training clusters with multi-petabyte datasets are becoming commonplace, having an optical fabric that can keep up without a proportional explosion in complexity is a decisive strategic benefit. MCF provides exactly that: more performance for essentially the same physical complexity, turning a looming scaling problem into a manageable evolution.

It is also worth noting that MCF and hollow-core fiber are complementary, not mutually exclusive. As highlighted, HCF will likely be leveraged for latency-sensitive links (where its ~30–50% speed advantage is crucial [10]), and MCF for capacity-intensive links (where its multi-core parallelism shines). Data centers handling AI and real-time workloads may well deploy both: HCF for ultra-low-latency and high-power optical transmission between certain nodes (or edge locations), and MCF for bulk data movement and inter-cluster communication. This future showcases an advanced optical fabric that adapts to specific needs, something that just a few years ago might have sounded far-fetched but is now within reach thanks to rapid progress in fiber technologies.

In conclusion, multi-core fiber offers a strong value proposition grounded in sound engineering and demonstrated results. It enables hyperscale and AI data center operators to gracefully scale their networks in capacity and efficiency, staying ahead of demand curves. Moreover, MCF is emerging as an essential enabler for the migration toward advanced communications infrastructure in hyperscale environments, supporting the transition to next-generation network architectures. While traditional single-core fibers will continue to play a role, MCF offers substantial value that cannot be overlooked in forward-looking network planning. The first commercial deployments and standardization efforts underway in 2024–2025 mark the inflection point where MCF moves from experimental to practical. Investors should take confidence that backing MCF development or early adoption is likely to yield dividends as the industry coalesces around this next-gen fiber solution. Customers (cloud service providers, enterprises, HPC centers) evaluating their future data center expansions should consider pilot deployments of MCF to familiarize themselves with its capabilities and integration. Those who do so stand to gain a competitive edge in providing high-bandwidth, scalable, and cost-effective services.

Ultimately, just as wavelength-division multiplexing (WDM) revolutionized fiber capacity in past decades, space-division multiplexing via multi-core fiber is poised to play a pivotal role in data center and communications networks. With multi-core fiber, the industry can continue to grow in step with insatiable AI and cloud data demands, delivering on the promise of hyperscale computing without hyperscale complexity. VAFC Global and its partners in this paper are confident that multi-core fiber will become a mainstay of high-performance data center interconnects, and we recommend that stakeholders engage with this technology early to fully realize its benefits.

References

[1] T. Oda et al., "Loss performance of field-deployed high-density 1152-channel link constructed with 4-core multicore fiber cable," Optical Fiber Communication Conf. (OFC), Paper Tu2C.4, 2023.

[2] C. Antonelli et al., "Space-Division Multiplexed Transmission from the Lab to the Field," European Conf. Optical Communication (ECOC), 2024.

[3] Y. Seki et al., "Which Multi-Core Fiber Layout is Best for Highest Capacity?" Technical Report, 2023.

[4] H. Takehana et al., "Connection technologies for 100 µm cladding 4-core MCF," EXAT 2025, Paper P-4.

[5] TeleGeography, "Used International Bandwidth Reaches New Heights," TeleGeography Blog, Oct. 2024. [Online]. Available: https://blog.telegeography.com/used-international-bandwidth-reaches-new-heights

[6] B. Zhu, "SDM Fibers for Data Center Applications," Optical Fiber Communication Conf. (OFC), Paper M1F.4, 2019.

[7] EXAT Technical Committee, "EXAT Roadmap ver.2 – 125 μm Cladding Multi-core Fiber Progress," EXAT Consortium Technical Report, 2020.

[8] N. Wada, "The underlying limits of networks that feed FTT-x," FiberConnect APAC Conference, 2024.

[9] Microsoft Azure, "Microsoft acquires Lumenisity, an innovator in hollow core fiber (HCF) cable," Microsoft Official Blog, Dec. 2022. [Online]. Available: https://blogs.microsoft.com/blog/2022/12/09/microsoft-acquires-lumenisity-an-innovator-in-hollow-core-fiber-hcf-cable/

[10] K. Miller, "Emerging Trends in Optical Fiber: Hollow-core and Multicore," M2 Optics Industry Blog, 2024. [Online]. Available: https://www.m2optics.com/blog/emerging-trends-in-optical-fiber-hollow-core-and-multicore-fibers

[11] R. Mears, K. Harrington, W. J. Wadsworth, J. M. Stone, and T. A. Birks, "Multi-core anti-resonant hollow core optical fiber," Optics Letters, vol. 49, no. 23, pp. 6761-6764, Dec. 2024, doi: 10.1364/OL.543062.

[12] S. Kajikawa, T. Oda, K. Aikawa, et al., "Characteristics of over 600-km-long 4-core multicore fiber drawn from a single preform," Optical Fiber Communication Conf. (OFC), Paper M3B.5, Mar. 2023.

[13] Fujikura Ltd., "Advanced connection technologies for multi-core fibers," Fujikura Research & Development, [Online]. Available: https://www.fujikura.co.jp/en/research/connection/

[14] Y. Tian, Z. Hu, B. Li, et al., "Applications and Development of Multi-Core Optical Fibers," Photonics, vol. 11, no. 3, p. 270, 2024.

[15] H. Yuan, M. Xu, et al., "Experimental Investigation of Inter-Core Crosstalk Dynamics in Trench-Assisted Multi-Core Fiber," arXiv:2008.08034, 2020.

[16] NTT Corporation, "Lineup of multi-core optical fiber construction, operation, and maintenance technologies," Press Release, Nov. 2024. [Online]. Available: https://group.ntt/en/newsrelease/2024/11/15/241115a.html

[17] L. Benzaid et al., "Field Trial of 12-Core Fiber Submarine Transmission," Optical Fiber Communication Conf. (OFC), Post-Deadline Paper, 2023.

[18] Omdia Research, "Multicore fiber being commercialized for an undersea network as an example of terrestrial benefits," Technical Brief, 2024. [Online]. Available: https://omdia.tech.informa.com/om128310/multicore-fiber-being-commercialized-for-an-undersea-network-is-an-example-of-how-such-solutions-may-be-of-benefit-to-terrestrial-networks

[19] Optics.org, "Hollow-core fiber startup targets AI hyperscalers," Industry Report, Feb. 2024. [Online]. Available: https://optics.org/news/16/2/13

[20] Sumitomo Electric, "Sumitomo Electric to Exhibit at ECOC Exhibition 2024," Corporate Press Release, Sep. 2024. [Online]. Available: https://sumitomoelectric.com/press/2024/09/prs040
